Ever noticed that the sound levels of radio stations vary? Found that some radio stations are harder to listen to than others? Here’s our guide to the broadcast chain and audio processing.

This page was created following a discussion on our forum, “What Governs Volume”, to help explain how a radio station’s audio gets processed before a listener gets to hear it. Some radio stations sound “louder” than others, and the same piece of music played on two different radio stations can sound different.

In many cases, it’s all about audio processing. Here’s our quick guide to how radio stations send out their audio.

The Broadcast Chain

First off, it’s important to understand how a presenter’s voice gets from the studio to your radio. Here are the basics:

Radio shows are created in a studio such as this one:

1. A broadcast radio studio mixing desk

Studio staff are trained to make sure that the audio leaves the studio at a consistent “level”.

This is for two reasons: 1) so that the listener doesn’t have to keep turning their radio up or down, and 2) to avoid distortion or damage to equipment.

Staff monitor their levels using meters, and make adjustments to the levels using controls on the mixing desk. In radio, the meters used to monitor levels are called PPMs (Peak Programme Meters).

2. Peak Programme Meters on a studio mixing desk

These PPMs are calibrated on a scale of 1 to 7. Stations typically peak to “5” on this scale.
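To put rough numbers on that scale, here is a minimal sketch that converts between PPM marks and signal level in dBu. It assumes the common BBC-style convention in which mark 4 corresponds to 0 dBu and the marks are 4 dB apart. That convention is our assumption for illustration and is not taken from the meters pictured here, and the function names are our own.

```python
def dbu_to_ppm(level_dbu: float) -> float:
    """Convert a level in dBu to a position on a 1-7 PPM scale.

    Assumption: mark 4 = 0 dBu, and each mark is 4 dB apart.
    """
    mark = 4.0 + level_dbu / 4.0
    # The printed scale only runs from 1 to 7, so pin the result to that range.
    return max(1.0, min(7.0, mark))


def ppm_to_dbu(mark: float) -> float:
    """Convert a PPM mark back to dBu under the same assumption."""
    return (mark - 4.0) * 4.0


# Under this assumed calibration, a station peaking to "5" is 4 dB above mark 4.
print(ppm_to_dbu(5))
```

If a particular desk is calibrated differently, only the two constants (the mark-4 reference and the 4 dB step) would need changing.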

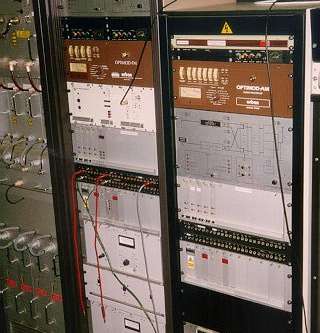

From the studio, the audio goes to a room called the “Racks Room”, where the station’s sound is processed and sent off to the transmitter site. Here is a shot of a Racks Room – some of the equipment here is for audio processing.

3. A Radio Station Racks Room

The audio leaves the studio and goes via high-quality music lines from BT to the transmitter site.

At the transmitter site, the audio is processed ready for transmission.

4. A radio station transmitter

At the top of Rack 2 and Rack 3 (pictured above) are two brown units, called OptiMod processors. These are industry-standard audio processors that adjust the sound for consistency, enhancement, equalisation and “loudness”. This dynamic audio processing happens just prior to transmission (modulation).

It is this audio processing that puts off many radio listeners, who prefer natural audio to compressed audio that’s been made “louder”.

So how does compression work?

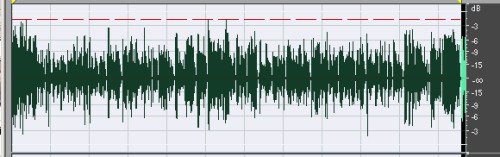

To illustrate audio compression, let’s take an example. Here is an audio waveform – this represents about 1 minute of speech. It is “natural” – i.e. uncompressed.

The red line indicates the maximum allowed level. Going over this line may result in distortion.

5. One minute of male voice, uncompressed

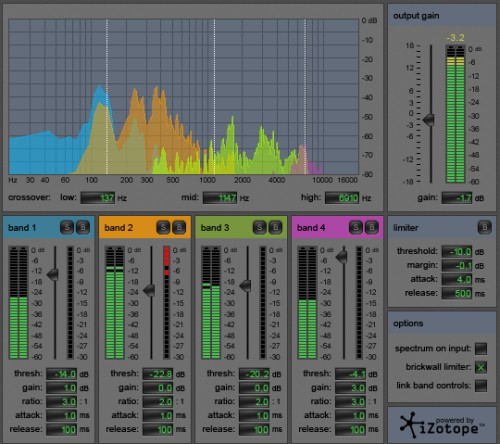

Now, we feed this through a multi-band audio processor. Here’s the processor at work:

6. A multi-band audio compressor processing that voice

The top half of the display is a spectrum analyser, showing low frequency on the left, to high frequency on the right. This unit has four independent processors, each working on a different band of the audio’s frequency range.

Most of the work is happening at the lower end of the audio spectrum (in this example, a male voice is being processed). The orange-coloured band (Band 2) is being processed most heavily.
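The idea of splitting audio into frequency bands can be sketched in a few lines of Python. This is only an illustration, not how an OptiMod or any real processor is built: the crossover frequencies, the very gentle first-order filters and the 400 Hz test tone (a stand-in, not real speech) are all our own assumptions.

```python
import math


def one_pole_lowpass(samples, cutoff_hz, rate):
    """Very gentle first-order low-pass filter (illustrative only)."""
    alpha = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / rate)
    out, state = [], 0.0
    for s in samples:
        state += alpha * (s - state)
        out.append(state)
    return out


def split_bands(samples, crossovers_hz, rate):
    """Split audio into len(crossovers_hz) + 1 bands that sum back to the input."""
    bands = []
    remainder = list(samples)
    for cutoff in crossovers_hz:
        low = one_pole_lowpass(remainder, cutoff, rate)
        bands.append(low)
        # Whatever the low-pass did not keep is passed on to the next band.
        remainder = [r - l for r, l in zip(remainder, low)]
    bands.append(remainder)  # everything above the last crossover
    return bands


def rms(samples):
    """Average (root-mean-square) level of a list of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


RATE = 16000                       # samples per second (assumed)
CROSSOVERS_HZ = [200, 1000, 4000]  # hypothetical band edges, giving four bands

# Test signal: a strong 400 Hz tone plus a weak 3 kHz tone, one second long.
signal = [
    math.sin(2 * math.pi * 400 * n / RATE)
    + 0.1 * math.sin(2 * math.pi * 3000 * n / RATE)
    for n in range(RATE)
]

bands = split_bands(signal, CROSSOVERS_HZ, RATE)
for number, band in enumerate(bands, start=1):
    print(f"Band {number}: RMS level {rms(band):.3f}")
```

In a multi-band processor, each of these bands would then go through its own compressor before the bands are added back together, so a heavy bass note can be turned down without also turning down the treble.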

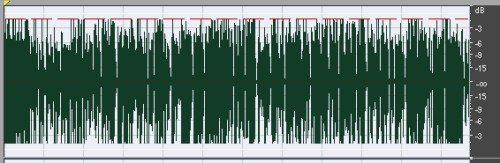

And here’s the result: a much more consistent sound that has been artificially made “louder”. This will sound more “punchy”, but not as natural as the unprocessed sound.

7. The same audio file, after dynamic audio processing
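Why does compressed audio sound “louder” when its peaks are no higher? The sketch below shows the effect with a single-band compressor followed by make-up gain. It is a simplified illustration under our own assumptions: the threshold, ratio and release values are hypothetical, the test signal is a quiet tone followed by a loud one rather than real speech, and real broadcast processors are considerably more sophisticated.

```python
import math


def compress(samples, threshold_db=-20.0, ratio=4.0, release=0.999):
    """Turn down audio whose peak envelope rises above the threshold."""
    out = []
    envelope = 0.0
    for s in samples:
        # Peak envelope: rises instantly with the signal, then decays slowly.
        envelope = max(abs(s), envelope * release)
        gain = 1.0
        if envelope > 0.0:
            level_db = 20.0 * math.log10(envelope)
            if level_db > threshold_db:
                over_db = level_db - threshold_db
                # Keep only 1/ratio of the overshoot; the rest is gain reduction.
                gain_db = over_db / ratio - over_db
                gain = 10.0 ** (gain_db / 20.0)
        out.append(s * gain)
    return out


def normalise(samples, peak=1.0):
    """Make-up gain: scale the audio so its highest peak sits at `peak`."""
    biggest = max(abs(s) for s in samples)
    return [s * peak / biggest for s in samples]


def rms(samples):
    """Average (root-mean-square) level, a rough stand-in for loudness."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


RATE = 8000  # samples per second (assumed)
tone = [math.sin(2 * math.pi * 440 * n / RATE) for n in range(RATE)]
quiet = [0.1 * s for s in tone]  # one second, 20 dB below full level
loud = list(tone)                # one second at full level
signal = quiet + loud

processed = normalise(compress(signal))

print(f"Peak before: {max(abs(s) for s in signal):.3f}")
print(f"Peak after:  {max(abs(s) for s in processed):.3f}")
print(f"Average level before: {rms(signal):.3f}")
print(f"Average level after:  {rms(processed):.3f}")
```

Both versions peak at the same maximum level, but the quiet passage has been pulled up closer to the loud one, so the average level is higher. That higher average, with the peaks still under the limit, is what the ear hears as “louder”.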

Any comments or questions? Add them to the “What Governs Volume” thread in our forum.

Hi, great article. A question comes to mind: is there a way to get the same levels from both of my turntables by using a rack component instead of doing it manually?

Thank you.

Martin Allen